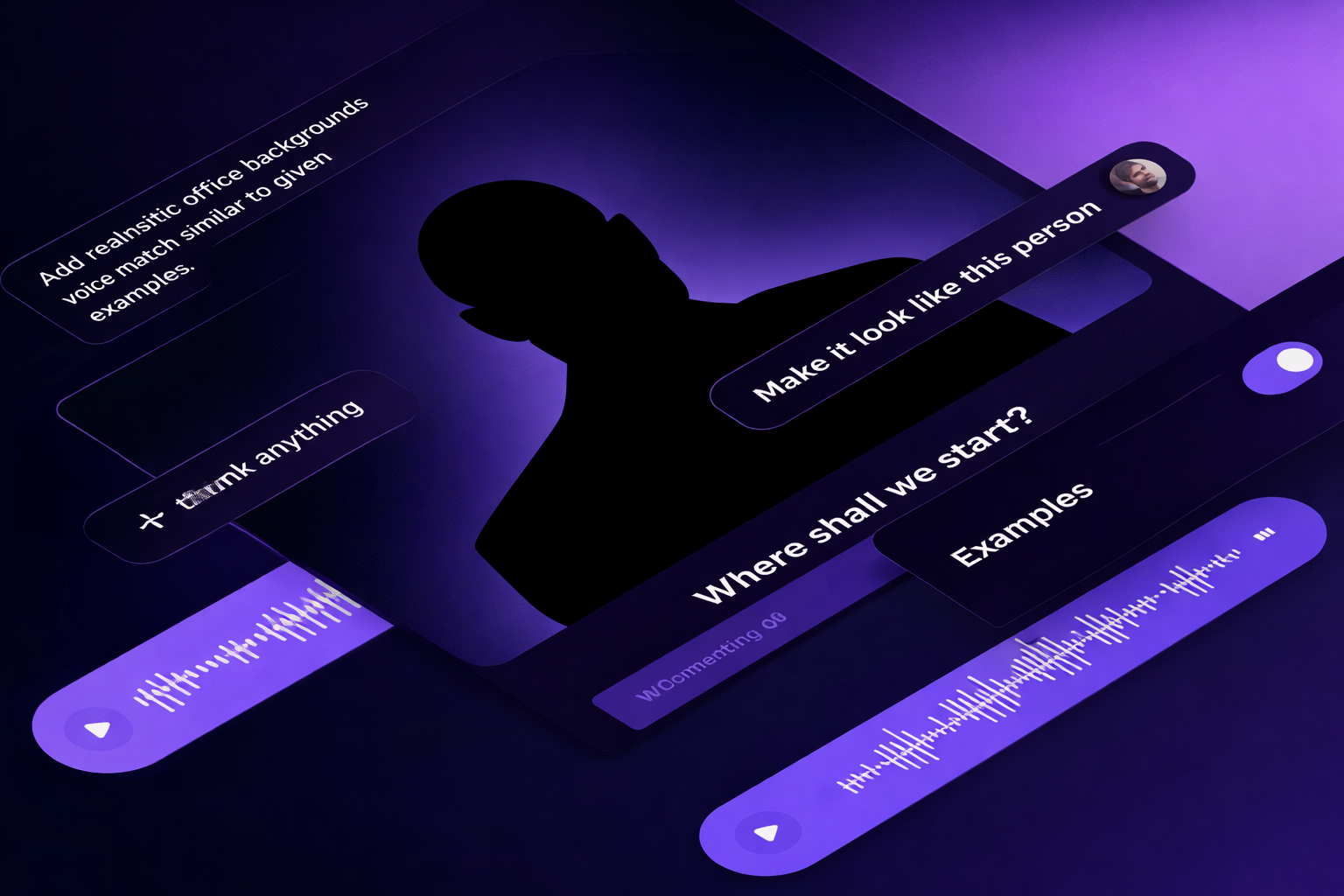

Deepfake phishing has rapidly emerged as one of the most dangerous cybersecurity threats in 2026. Unlike traditional phishing attacks that rely on poorly written emails or suspicious links, deepfake phishing uses artificial intelligence to convincingly impersonate real people (CEOs, managers, clients, or even family members) through voice, video, and hyper-realistic images. As AI tools become more accessible, cybercriminals are weaponizing them to exploit human trust and slip past technical defenses.

This guide explains how deepfake phishing works, why it’s so effective, and—most importantly—how individuals and organizations can defend against it in 2026.

What Is Deepfake Phishing?

Deepfake phishing is an advanced form of social engineering that uses AI-generated audio, video, images, or text to manipulate victims into taking harmful actions. These attacks often include:

- Voice deepfakes mimicking executives requesting urgent wire transfers

- Video deepfakes impersonating leadership during virtual meetings

- AI-generated emails written in the exact tone and style of trusted contacts

Traditional phishing also relies on trust, but deepfake attacks exploit the trust people place in a familiar voice or face, which makes them far harder to detect.

Why Deepfake Phishing Is Exploding in 2026

Several factors have fueled the rise of deepfake phishing:

- Cheap and powerful AI tools – Voice cloning and video synthesis now require minimal technical skill

- Remote work culture – Reduced face-to-face verification increases vulnerability

- Data abundance – Social media provides attackers with ample voice, image, and behavioral data

- Faster attack execution – AI automates personalization at scale

Some cybersecurity analysts project that deepfake-driven fraud losses could surpass traditional phishing losses by the end of 2026.

Common Deepfake Phishing Scenarios

Understanding real-world attack patterns is critical:

- CEO Fraud (a voice variant of business email compromise, or BEC): Employees receive a voice call from a “CEO” demanding an urgent payment

- Vendor Impersonation: Fake video calls request changes in banking details

- HR & Payroll Scams: AI emails or calls ask employees to “verify” sensitive data

- Customer Support Fraud: Deepfake voices impersonate customers to bypass authentication

How to Defend Against Deepfake Phishing in 2026

1. Implement Multi-Factor Human Verification

Never rely on a single communication channel. High-risk requests should require out-of-band verification, such as callbacks, internal chat confirmation, or secondary approval workflows.
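As a minimal sketch of the out-of-band rule described above, the check below only treats a high-risk request as verified once it has been confirmed on a channel other than the one it arrived on. The action names, channel names, and the `Request` and `is_verified` helpers are hypothetical and exist only for illustration; a real workflow would tie this to a ticketing or approval system.

```python
from dataclasses import dataclass, field

# Hypothetical list of actions that count as high-risk; adjust to your organization.
HIGH_RISK_ACTIONS = {"wire_transfer", "bank_detail_change", "credential_reset"}


@dataclass
class Request:
    action: str
    origin_channel: str  # channel the request arrived on, e.g. "voice_call"
    confirmations: set[str] = field(default_factory=set)  # channels that confirmed it


def is_verified(req: Request) -> bool:
    """Return True if the request may proceed.

    Low-risk requests pass. High-risk requests need at least one
    confirmation from a channel different from the originating one.
    """
    if req.action not in HIGH_RISK_ACTIONS:
        return True
    out_of_band = req.confirmations - {req.origin_channel}
    return len(out_of_band) >= 1


# Usage: a wire transfer requested by phone stays blocked until someone
# confirms it through a different channel, such as a callback to a known number.
req = Request(action="wire_transfer", origin_channel="voice_call")
req.confirmations.add("voice_call")             # same channel: does not count
blocked = not is_verified(req)
req.confirmations.add("callback_known_number")  # independent channel: counts
allowed = is_verified(req)
```

The key design choice is that a confirmation on the originating channel never counts, since an attacker who controls that channel can simply confirm their own request.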

2. Train Employees on AI-Based Threats

Security awareness training must evolve. Employees should be trained to:

- Question urgency and emotional pressure

- Recognize AI-generated speech patterns

- Follow strict verification protocols—even for executives

3. Use AI-Powered Security Tools

Many modern security platforms now integrate features such as:

- Deepfake voice detection

- Behavioral anomaly analysis

- AI-based email and call filtering

These tools can flag subtle inconsistencies that humans may miss, but they work best as support for human verification rather than a replacement for it.
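To make the idea of behavioral anomaly analysis concrete, here is a deliberately simple sketch that flags a payment amount deviating sharply from a requester's history. It uses a plain z-score, which is an assumption chosen for illustration and not how any particular commercial product works. The `is_anomalous` function and its `threshold` default are hypothetical.

```python
from statistics import mean, stdev


def is_anomalous(history: list[float], amount: float, threshold: float = 3.0) -> bool:
    """Flag an amount that sits more than `threshold` standard deviations
    away from the mean of past amounts."""
    if len(history) < 2:
        # Too little history to build a baseline: treat as suspicious.
        return True
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # All past amounts were identical: any different amount stands out.
        return amount != mu
    return abs(amount - mu) / sigma > threshold


# Usage: past vendor payments cluster around 1,000, so a sudden 48,000
# request is flagged while 1,150 is not.
past_payments = [1000.0, 1200.0, 950.0, 1100.0, 1050.0]
flag_large = is_anomalous(past_payments, 48000.0)
flag_normal = is_anomalous(past_payments, 1150.0)
```

A flag like this is only a reason to trigger extra verification. It does not prove a request is fraudulent.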

4. Strengthen Identity and Access Controls

Adopt zero-trust security models where no request is automatically trusted. Limit privileges and enforce role-based access to reduce damage if an attack succeeds.
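The sketch below illustrates deny-by-default, role-based access combined with separation of duties, so that a single deceived employee cannot both create and approve a payment. The role names, permission names, and both helper functions are hypothetical examples, not part of any specific product.

```python
# Hypothetical role-to-permission mapping; anything not listed is denied.
ROLE_PERMISSIONS: dict[str, set[str]] = {
    "accounts_payable": {"create_payment"},
    "finance_manager": {"create_payment", "approve_payment"},
    "it_helpdesk": {"reset_password"},
}


def is_allowed(role: str, action: str) -> bool:
    """Deny by default: unknown roles and unlisted actions are refused."""
    return action in ROLE_PERMISSIONS.get(role, set())


def can_release_payment(
    creator_role: str, approver_role: str, creator_id: str, approver_id: str
) -> bool:
    """Require two distinct people, each holding the right permission."""
    return (
        creator_id != approver_id
        and is_allowed(creator_role, "create_payment")
        and is_allowed(approver_role, "approve_payment")
    )
```

With this arrangement, a convincing deepfake call to one accounts-payable clerk is not enough on its own, because the clerk's role cannot approve the payment.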

5. Establish Clear Internal Policies

Document and enforce policies such as:

- No financial or credential changes via voice/video alone

- Mandatory written confirmation for sensitive actions

- Escalation procedures for suspicious communications
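Policies like these are easier to enforce when they are written down as explicit rules. The following is a rough sketch, under the assumption that each request records the channels on which it has been seen. The `Decision` values, the `SENSITIVE_ACTIONS` and `LIVE_CHANNELS` sets, and the `triage` function are all hypothetical names chosen for illustration.

```python
from enum import Enum


class Decision(Enum):
    PROCEED = "proceed"
    HOLD = "hold"          # wait for written confirmation
    ESCALATE = "escalate"  # hand off to the security team


# Hypothetical categories; adapt to your own policy document.
SENSITIVE_ACTIONS = {"payment", "bank_detail_change", "credential_change"}
LIVE_CHANNELS = {"voice", "video"}


def triage(action: str, channels: set[str], flagged_suspicious: bool) -> Decision:
    """Apply the three policies in priority order."""
    if flagged_suspicious:
        # Anything reported as suspicious goes to escalation first.
        return Decision.ESCALATE
    if action in SENSITIVE_ACTIONS and channels <= LIVE_CHANNELS:
        # Seen only on voice/video (or not at all): no written confirmation yet.
        return Decision.HOLD
    return Decision.PROCEED
```

For example, `triage("bank_detail_change", {"video"}, False)` returns `Decision.HOLD`, while the same request with an additional written channel such as a signed ticket would proceed.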

The Role of AI in Fighting Deepfake Phishing

Ironically, AI is also one of the strongest defenses against AI-driven threats. Advanced cybersecurity solutions now use machine learning to:

- Detect synthetic audio artifacts

- Analyze facial micro-expressions in video

- Identify language patterns unique to AI-generated content

In 2026, organizations that fail to adopt AI-driven defenses are significantly more vulnerable to deepfake phishing attacks.

Final Thoughts

Deepfake phishing is no longer a future threat—it is a present reality. As attackers grow more sophisticated, cybersecurity strategies must evolve beyond traditional awareness and basic tools. A combination of human vigilance, AI-powered security, strict verification processes, and proactive policies is essential.

Organizations that take deepfake phishing seriously today will protect not only their finances but also their reputation, customer trust, and long-term business continuity.