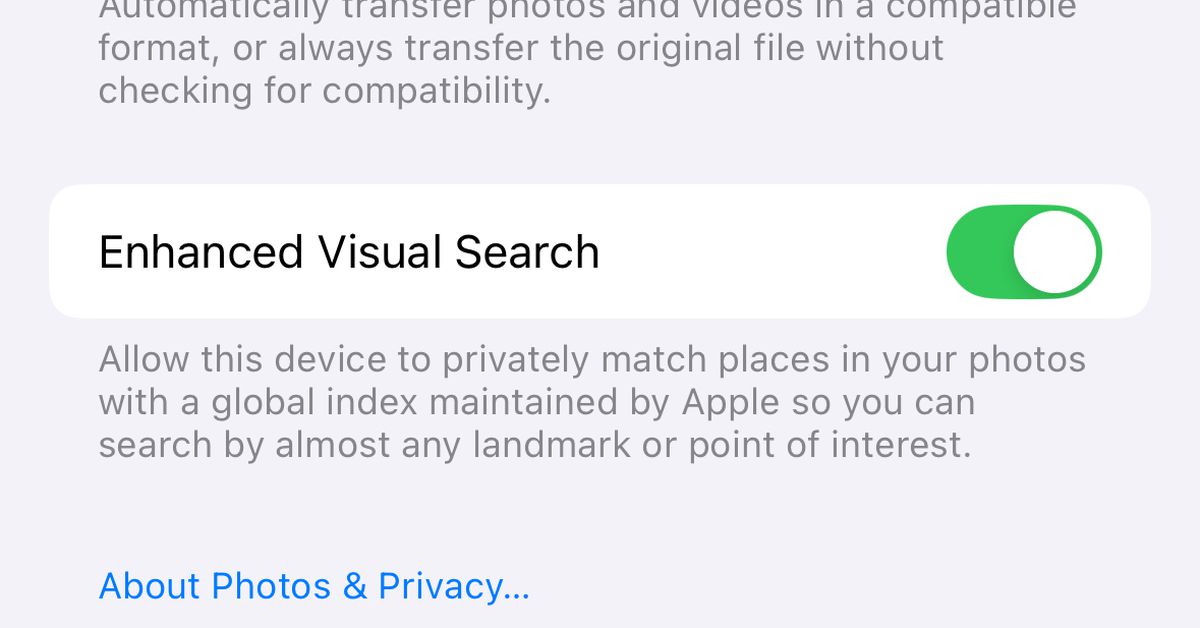

Sure enough, when I checked my iPhone 15 Pro this morning, the toggle was on. You can find it yourself by going to Settings > Photos (or System Settings > Photos on Mac). Enhanced Visual Search lets you look up landmarks you've taken photos of or search for those images using the landmarks' names.

To see what it enables in the Photos app, swipe up on a photo you've taken of a building and select “Look up landmark.” A card will appear that ideally identifies it. Here are some examples from my phone:

At first glance, this is a convenient extension of Photos' Visual Look Up feature, which Apple introduced in iOS 15 and which lets you identify plants or find out what the symbols on a laundry tag mean. But Visual Look Up doesn't need special permission to share data with Apple, and this does.

A description under the toggle says you're allowing Apple to “privately match locations in your photos with a global index created by Apple.” As for how, there are details in an Apple machine-learning research blog post about Enhanced Visual Search that Johnson links to:

The process begins with an on-device ML model that analyzes a given photo to determine if there is a “region of interest” (ROI) that might contain a landmark. If the model detects an ROI in the “landmark” domain, a vector embedding is computed for that region of the image.

According to the blog, that vector embedding is encrypted and sent to Apple to compare with its database. The company provides a very technical explanation of vector embeddings in a research paper, but IBM puts it more simply, writing that embedding “transforms a data point, such as a word, sentence, or image, into an n-dimensional array of numbers representing the characteristics of that data point.”
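To make that a little more concrete, here is a minimal Python sketch of how matching an embedding against an index can work in general. It is not Apple's implementation: the landmark names, the three-number vectors, and the `best_match` helper are all made up for illustration, real embeddings have far more dimensions, and the sketch skips the encryption step the blog describes.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical index: each landmark is represented by a tiny made-up embedding.
LANDMARK_INDEX = {
    "Golden Gate Bridge": [0.9, 0.1, 0.2],
    "Eiffel Tower": [0.1, 0.8, 0.3],
    "Sydney Opera House": [0.2, 0.3, 0.9],
}


def best_match(query, index):
    """Return the name of the index entry most similar to the query vector."""
    return max(index, key=lambda name: cosine_similarity(query, index[name]))


# Hypothetical embedding computed for the region of interest in a photo.
photo_embedding = [0.85, 0.15, 0.25]
print(best_match(photo_embedding, LANDMARK_INDEX))
```

The point of the sketch is only that the comparison happens between lists of numbers, not between the photos themselves.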

Like Johnson, I don't fully understand Apple's research blogs, and Apple did not immediately respond to our request for comment about Johnson's concerns. It seems as if the company put a lot of effort into keeping the data private, partly by condensing the image data into a format that is legible to ML models.

Still, making the toggle opt-in, like those for sharing analytics data or recordings or Siri interactions, rather than something users have to discover, seems like it would have been a better option.